Artificial Intelligence can officially be traced back to 1956, in the early days of computing, although Alan Turing had theorised it a few years earlier in his paper “Computing Machinery and Intelligence” (1950), published in the journal Mind. It can be defined as the branch of computer science that gives machines the computational and logical capabilities usually considered typical of humans, such as the ability to make decisions, to perceive in space and time the environment in which the machine is located, and to recognise images and objects. This is achieved through design and programming at both the hardware and software level.

The machine therefore goes beyond the simple ability to compute or to recognise and process data, and shows other forms of “intelligence”, such as those described by Howard Gardner in his 1983 book “Frames of Mind”. His Theory of Multiple Intelligences is based on the idea that all human beings have at least seven forms of “mental representation”, including spatial, interpersonal (social), bodily-kinesthetic and intrapersonal (introspective) intelligence. Machines built in the AI field aim to reproduce some of these types of intelligence.

More recently, the European Commission through its dedicated team (see box) proposed the following definition (April 2018).

● Artificial intelligence (AI) refers to systems that exhibit intelligent behaviour and that are able to analyse their environment and take action – with some degree of autonomy – to achieve specific goals.

● Artificial intelligence systems can be purely software based (e.g. voice assistants, image analysis software, search engines, speech and facial recognition systems) or embedded in hardware devices (e.g. advanced robots, self-driving cars, drones or smart sensors).

● Reasoning and Decision Making

● Learning

● Robotics

| Component | Description |
| --- | --- |
| Sensors for perceiving the environment | For example cameras, microphones, a keyboard or other input devices, as well as physical sensors that measure temperature, pressure or distance. An AI system needs adequate sensors to perceive the data in its environment that is relevant to the assigned objective. |
| Processing and decision-making algorithms | The data captured by the sensors is processed by the AI reasoning module, which uses it to decide on the appropriate action for the objective to be achieved. In this step, data becomes information. |
| Decision implementation | Once the action has been decided, the AI system carries it out through the actuators at its disposal, which may be physical or software based. Note that rational AI systems do not always choose the best possible action for their objective, because of limited resources such as time or computing power. |
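The sense, decide, act cycle summarised in the table can be illustrated with a deliberately simple agent. The Python sketch below is only an illustration under stated assumptions: the `Room` environment, its toy heating physics, the 21 °C target and the hysteresis band are all hypothetical and are not part of the Commission's definition.

```python
from dataclasses import dataclass


@dataclass
class Room:
    """Hypothetical environment: a room whose temperature the agent controls."""
    temperature: float
    heater_on: bool = False

    def step(self) -> None:
        # Toy physics: +0.5 °C per step while heating, -0.3 °C per step otherwise
        self.temperature += 0.5 if self.heater_on else -0.3


def sense(room: Room) -> float:
    """Sensor: perceive the data relevant to the objective (the temperature)."""
    return room.temperature


def decide(temperature: float, heater_on: bool, target: float = 21.0, band: float = 0.5) -> bool:
    """Reasoning module: turn the reading into a decision (rule with hysteresis)."""
    if temperature < target - band:
        return True
    if temperature > target + band:
        return False
    return heater_on  # inside the comfort band: keep the current action


def act(room: Room, heater_on: bool) -> None:
    """Actuator: apply the decision to the environment."""
    room.heater_on = heater_on


def run(room: Room, steps: int, target: float = 21.0) -> list:
    """Repeat the sense -> decide -> act cycle and record the temperature."""
    trace = []
    for _ in range(steps):
        reading = sense(room)
        act(room, decide(reading, room.heater_on, target))
        room.step()
        trace.append(room.temperature)
    return trace


trace = run(Room(temperature=18.0), steps=60)
print(f"final temperature: {trace[-1]:.1f} °C")
```

The hysteresis band is a design choice: without it, a reading hovering around the target would switch the heater on and off at every step.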

At the European level, the High-Level Expert Group on Artificial Intelligence (AI HLEG) was established by the European Commission in 2018. The overall objective of this group of 52 high-profile AI experts is to support the implementation of the European strategy on artificial intelligence. Its tasks include formulating recommendations on future policy development and on the ethical, legal and social issues related to AI.

Through literature and cinema, humanity has long reflected on its possible interactions with artificial intelligence. The first fictional character often considered as such is the creature in Mary Shelley’s Frankenstein (1818), famously adapted for the screen in 1931. It is not a being made of metal and silicon, as we tend to imagine today, but it is nonetheless a non-human being brought to life through the wonders of technology, one that discovers free will and fights for it by challenging its own creator.

It is a concept that we come across time and time again in later works, starting with “R.U.R.”, the 1920 play by the Czech writer Karel Čapek. In Czech, robota means work, in particular forced labour, and in the play the “machines” built to replace us in the most dangerous and arduous jobs end up becoming increasingly intelligent, learning not only notions and actions but also feelings. Discrimination, fear and a lack of human sensitivity are often the reasons for their rebellion.

Isaac Asimov, considered one of the founders of science fiction literature, outlined the three laws of robotics:

1. A robot may not injure a human being or, through inaction, allow a human being to come to harm.
2. A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.
3. A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

As Asimov writes in his books, these laws apply only as long as they do not conflict with the Zeroth Law: “A robot may not harm humanity, or, by inaction, allow humanity to come to harm.”

It is impossible to mention every work of literature and film on this theme, but the following three are the types of artificial intelligence most commonly seen in cinema. For film buffs, an exhaustive list can be found here.

Research by the Artificial Intelligence Observatory of the School of Management of the Politecnico di Milano shows that 96% of Italian companies that have already implemented AI solutions have not seen machines replace human roles in their business.

Artificial intelligence is part of people’s daily lives more than we imagine. In medicine, for example, AI-based technology is increasingly used to diagnose diseases such as Parkinson’s and Alzheimer’s, but it also features in seemingly untouchable fields such as art: in October 2018, Christie’s sold an AI-generated painting for over 430,000 dollars. And just as we are already used to being guided, often without realising it, in our shopping (Amazon & co.) and in our viewing habits (Netflix above all), we will eventually get used to managing our financial investments through customer care services run by forms of artificial intelligence, such as Bank of America’s virtual assistant Erica.

The terms “Strong” and “Weak” in relation to Artificial Intelligence were coined by John Searle, who wanted to emphasise the different performance levels of different types of machines.

| Weak AI | Strong AI |
| --- | --- |
| A narrow application with a limited objective. | A wide application with a broader reach. |
| Good at specific tasks. | Has human-level intelligence. |
| Uses supervised and unsupervised learning to process data. | Uses clustering and association to process data. |
| Example: voice assistants | Example: advanced robotics |

Narrow AI

Systems designed to solve a single problem. By definition, they have limited capabilities: for example, they can recommend a product on an e-commerce site or provide a weather forecast. They are able to mimic human behaviour, but can only excel in environments with a limited number of parameters.

General AI

The type of Artificial Intelligence closest to human intelligence. Some scientists suggest that we are close to creating an AI capable of relating to the social environment in the same way a human being would. The current gap is not in the ability to analyse and process data (where machines are already far superior to us), but in their inability to think abstractly and have original ideas.

Super AI

Theoretically, this AI would surpass human intelligence in ways we cannot currently even imagine. A super AI would be better than us at everything, but here we enter the realm of science fiction. Its abilities would include rational decision-making and even forming emotional relationships.

Machine Learning is the science that allows machines to interpret, process and analyse data to solve real-world problems. At a basic level, ML means providing data to a computer to train it to perform a task. The machine – the algorithm – improves its performance based on the data it receives.
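At its most basic, “improving performance based on data” can be shown with the simplest supervised model: a straight line whose parameters are adjusted by gradient descent to reduce the prediction error on the training data. A minimal Python sketch; the toy data set, learning rate and number of epochs are assumptions chosen for illustration, and a real project would normally rely on an established library.

```python
def fit_line(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y ≈ w*x + b with batch gradient descent on the mean squared error (MSE)."""
    w, b = 0.0, 0.0
    n = len(xs)
    losses = []
    for _ in range(epochs):
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        losses.append(sum(e * e for e in errors) / n)
        # Partial derivatives of the MSE with respect to w and b
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Step against the gradient: the error on the data shrinks at each epoch
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b, losses


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # toy data generated by y = 2x + 1
w, b, losses = fit_line(xs, ys)
print(f"w={w:.3f}, b={b:.3f}")  # prints w=2.000, b=1.000
```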

Most machine learning still requires significant human input and is trained mainly on labelled datasets, although unsupervised algorithms, which work on unlabelled data, also exist.
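Unsupervised algorithms receive no labels and must find structure in the data on their own. A classic example is k-means clustering, sketched below in plain Python; the six points and the choice of two clusters are hypothetical.

```python
import math
import random


def kmeans(points, k, iterations=20, seed=0):
    """Group unlabelled points into k clusters with Lloyd's algorithm."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k distinct data points
    clusters = []
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its members
        for i, members in enumerate(clusters):
            if members:
                centroids[i] = tuple(sum(axis) / len(members) for axis in zip(*members))
    return centroids, clusters


# Hypothetical unlabelled measurements: nobody tells the algorithm which group is which
points = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.5), (8.0, 8.0), (8.5, 9.0), (9.0, 8.5)]
centroids, clusters = kmeans(points, k=2)
print(sorted(centroids))
```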

There are three categories in Machine Learning:

- Supervised learning

- Unsupervised learning

- Reinforcement learning
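Reinforcement learning is the least intuitive of the three: the system is not shown examples, but learns which actions pay off from the rewards it receives. A minimal sketch of the idea is an ε-greedy multi-armed bandit, in which three hypothetical actions return a reward with probabilities that the agent does not know in advance.

```python
import random


def epsilon_greedy_bandit(reward_probs, steps=5000, epsilon=0.1, seed=42):
    """Learn by trial and error which action pays best (epsilon-greedy strategy)."""
    rng = random.Random(seed)
    n_actions = len(reward_probs)
    counts = [0] * n_actions
    values = [0.0] * n_actions  # running estimate of each action's average reward
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n_actions)  # explore: try a random action
        else:
            action = max(range(n_actions), key=lambda a: values[a])  # exploit the best estimate
        # The environment: reward 1 with the action's hidden probability, otherwise 0
        reward = 1.0 if rng.random() < reward_probs[action] else 0.0
        counts[action] += 1
        # Incremental mean: Q <- Q + (reward - Q) / n
        values[action] += (reward - values[action]) / counts[action]
    return values, counts


values, counts = epsilon_greedy_bandit([0.2, 0.5, 0.8])
best_action = max(range(len(values)), key=lambda a: values[a])
print(best_action, counts)
```

The ε parameter balances exploration (trying actions that look worse, in case the estimate is wrong) against exploitation (using what has already been learned).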

Deep Learning is a subset of Machine Learning, based on multi-layer neural networks, that can learn from real-world data at successive levels of abstraction.
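Deep learning models are typically neural networks with several layers, where each layer builds a more abstract representation from the output of the previous one. The tiny Python network below illustrates the idea on the XOR problem: the hidden layer computes two intermediate concepts (OR and NAND) and the output layer combines them. To keep the example deterministic, the weights are set by hand; in a real deep learning system they would be learned from data, typically with backpropagation.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def dense(inputs, weights, biases):
    """Fully connected layer: weighted sums followed by a sigmoid activation."""
    return [sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


# Layer 1 (hidden): two intermediate features, roughly "x1 OR x2" and "NOT (x1 AND x2)"
HIDDEN_W = [[20.0, 20.0], [-20.0, -20.0]]
HIDDEN_B = [-10.0, 30.0]
# Layer 2 (output): "feature1 AND feature2", which equals XOR of the inputs
OUTPUT_W = [[20.0, 20.0]]
OUTPUT_B = [-30.0]


def xor_net(x1, x2):
    hidden = dense([x1, x2], HIDDEN_W, HIDDEN_B)
    return dense(hidden, OUTPUT_W, OUTPUT_B)[0]


print([round(xor_net(a, b)) for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]])  # [0, 1, 1, 0]
```

No single layer of this kind can compute XOR on its own; the intermediate representation is what makes the problem solvable, which is the intuition behind “levels of abstraction”.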

A striking example of this is Sophia, the android able to reproduce over 60 human facial expressions and take part in high-level debate.

Robotics is a branch of Artificial Intelligence that focuses on robots and their many fields of application. AI robots are artificial agents that act in a real-world environment to produce results by taking accountable actions.

Sophia the humanoid is a good example of AI in robotics.

An Expert System is a computer system based on Artificial Intelligence that captures the decision-making skills of a human expert and replicates them.

An ES uses a notation based on if-then logic to solve complex problems, rather than conventional procedural programming. The main application fields of this branch of AI include loan analysis, virus detection, information management and the operations of medical facilities.
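The if-then approach can be illustrated with a minimal forward-chaining inference engine: a rule fires whenever all its conditions are known facts, and each conclusion becomes a new fact until nothing more can be derived. The loan-analysis rules and fact names below are hypothetical and only sketch the mechanism, not a real credit policy.

```python
# Each rule: IF all conditions are known facts THEN add the conclusion (hypothetical rules)
RULES = [
    ({"stable_income", "low_debt"}, "good_repayment_capacity"),
    ({"good_repayment_capacity", "clean_credit_history"}, "low_risk"),
    ({"low_risk"}, "approve_loan"),
    ({"missed_payments"}, "high_risk"),
    ({"high_risk"}, "refer_to_human_analyst"),
]


def forward_chain(facts, rules):
    """Fire IF-THEN rules repeatedly until no new fact can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conditions <= known and conclusion not in known:  # all conditions satisfied
                known.add(conclusion)
                changed = True
    return known


applicant = {"stable_income", "low_debt", "clean_credit_history"}
print("approve_loan" in forward_chain(applicant, RULES))  # True
```

Because the knowledge lives in the rule list rather than in the control flow, an expert can add or change rules without touching the inference engine, which is the key difference from conventional procedural code.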

Fuzzy logic can be seen as an extension of Boolean “0-1” logic. Unlike the latter, it is a computing approach based on degrees of truth (any value between 0 and 1), and it can therefore handle even ambiguous contexts. For example, fuzzy logic is used in the medical field to support complex decision-making processes, in environmental control for self-driving vehicles and, again in cars, to manage automatic gearboxes.
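The difference from Boolean logic can be seen in a small sketch of fuzzy inference for a gearbox. Instead of “engine speed is high: yes or no”, each input has a degree of membership between 0 and 1; rules combine these degrees with min (AND), max (OR) and 1 - x (NOT), and a weighted average turns them back into a single output (a zero-order Sugeno-style scheme). The membership ranges, the rules and the meaning of the output are hypothetical, not taken from any real transmission controller.

```python
def triangular(x, a, b, c):
    """Membership degree (0..1) of x in a triangular fuzzy set: rises from a, peaks at b, falls to c."""
    if x <= a or x >= c:
        return 0.0
    if x <= b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)


def shift_tendency(rpm, throttle):
    """Return a value in [-1, 1]: -1 = downshift, 0 = hold, +1 = upshift (hypothetical rules)."""
    # Fuzzification: degrees of truth instead of True/False
    rpm_low = triangular(rpm, 0, 1000, 3000)
    rpm_medium = triangular(rpm, 1500, 3000, 4500)
    rpm_high = triangular(rpm, 3000, 5000, 7000)
    throttle_full = triangular(throttle, 0.6, 1.0, 1.4)

    # Rule evaluation: AND = min, OR = max, NOT = 1 - degree
    upshift = min(rpm_high, 1.0 - throttle_full)  # IF rpm is high AND throttle is NOT full
    hold = max(rpm_medium, throttle_full)         # IF rpm is medium OR throttle is full
    downshift = rpm_low                           # IF rpm is low

    # Defuzzification: weighted average of the rule outputs (+1, 0, -1)
    total = upshift + hold + downshift
    if total == 0.0:
        return 0.0  # no rule fires: keep the current gear
    return (upshift * 1.0 + hold * 0.0 + downshift * -1.0) / total


print(shift_tendency(4000, 0.3), shift_tendency(800, 0.3))
```

At 4000 rpm the engine speed is “high” to degree 0.5 and “medium” to degree 0.33 at the same time; a Boolean threshold would force it into exactly one category.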

Natural Language Processing (NLP) refers to the science of drawing insights from natural human language in order to communicate with machines and grow businesses.

Twitter uses NLP to filter out terrorist language in tweets, and Amazon uses it to understand customer reviews and improve the user experience.
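As an illustration of how review text can be turned into a decision, the sketch below trains a multinomial Naive Bayes classifier with add-one (Laplace) smoothing on a handful of invented reviews. It is not a description of Amazon's or Twitter's actual systems; it only shows one classic NLP technique.

```python
import math
from collections import Counter


def tokenize(text):
    return text.lower().split()


def train_naive_bayes(examples):
    """examples: list of (text, label). Returns label counts, per-label word counts, vocabulary."""
    label_counts = Counter(label for _, label in examples)
    word_counts = {label: Counter() for label in label_counts}
    for text, label in examples:
        word_counts[label].update(tokenize(text))
    vocab = {w for counts in word_counts.values() for w in counts}
    return label_counts, word_counts, vocab


def predict(text, model):
    label_counts, word_counts, vocab = model
    total_docs = sum(label_counts.values())
    scores = {}
    for label, n_docs in label_counts.items():
        total_words = sum(word_counts[label].values())
        score = math.log(n_docs / total_docs)  # log prior (logs avoid numerical underflow)
        for word in tokenize(text):
            if word not in vocab:
                continue  # ignore words never seen in training
            # Add-one smoothing: unseen (word, label) pairs do not zero out the probability
            score += math.log((word_counts[label][word] + 1) / (total_words + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)


REVIEWS = [  # hypothetical training data
    ("great product works perfectly", "positive"),
    ("excellent quality fast delivery", "positive"),
    ("love it great value", "positive"),
    ("broken on arrival terrible", "negative"),
    ("poor quality stopped working", "negative"),
    ("terrible support waste of money", "negative"),
]
model = train_naive_bayes(REVIEWS)
print(predict("great quality", model))        # positive
print(predict("terrible and broken", model))  # negative
```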

From the most recent statistics, it appears that worldwide, overall funding in the field of Artificial Intelligence is mostly aimed at building Machine Learning applications and platforms (almost $28.5 billion in mid-2019). The current scenario is still limited to Narrow AI.

But what will happen in the near future, when forms of AGI (Artificial General Intelligence) and ASI (Artificial Super Intelligence) begin to develop?

Despite what we may think, conditioned as we are by “cinematic” imagery, current business interests in the sector are not focused on companion robots or robots that assist us in our daily activities. The leading segment in the AI world is currently the recognition, classification and labelling of static images ($8 billion), followed by performance improvement in algorithmic trading strategies ($7.5 billion) and the efficient, scalable processing of patient data ($7.4 billion). The scenario will be a little less futuristic and more “applied”, at least until 2025.

Interview with Gianluca Toscano, Technology Leader, Teoresi Group (December 2020)

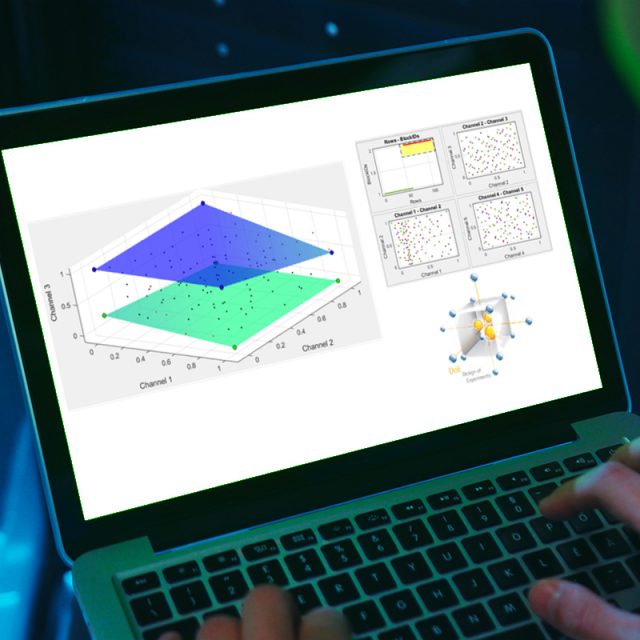

This software can produce reduced experimental acquisition plans, using a “space filling” approach, and build models based on these acquisitions to simulate real measurements spanning the entire domain of interest.

For complex systems (e.g. engine control) space filling techniques are preferable. In this particular case we used Sobol sequences.
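To illustrate what a space-filling plan looks like, the sketch below generates the first points of a two-dimensional Sobol sequence with the classic Gray-code construction (direction numbers from the primitive polynomial x + 1 for the second dimension) and maps them onto two hypothetical engine test variables. It is an illustration, not the tool's implementation; in Python, `scipy.stats.qmc.Sobol` provides a ready-made, higher-dimensional and optionally scrambled generator.

```python
def sobol_2d(n, bits=30):
    """First n points of the unscrambled 2-D Sobol sequence in [0, 1)^2 (Gray-code construction)."""
    # Direction numbers m_k. Dimension 1 uses m_k = 1 (the base-2 van der Corput sequence);
    # dimension 2 uses the primitive polynomial x + 1, i.e. m_k = 2*m_(k-1) XOR m_(k-1).
    m2 = [1]
    for _ in range(1, bits):
        m2.append((m2[-1] << 1) ^ m2[-1])
    # Integer direction numbers V_k = m_k * 2^(bits - k), for k = 1..bits
    v1 = [1 << (bits - k - 1) for k in range(bits)]
    v2 = [m2[k] << (bits - k - 1) for k in range(bits)]
    scale = float(1 << bits)
    x = y = 0
    points = [(0.0, 0.0)]
    for i in range(1, n):
        c = (i & -i).bit_length() - 1  # index of the lowest set bit of i
        x ^= v1[c]
        y ^= v2[c]
        points.append((x / scale, y / scale))
    return points[:n]


def scale_to_bounds(points, bounds):
    """Map unit-square points onto physical ranges [(lo1, hi1), (lo2, hi2)]."""
    return [tuple(lo + u * (hi - lo) for u, (lo, hi) in zip(p, bounds)) for p in points]


# Hypothetical engine test plan: 8 operating points over speed (rpm) and load (%)
plan = scale_to_bounds(sobol_2d(8), [(800.0, 6000.0), (0.0, 100.0)])
for speed, load in plan:
    print(f"{speed:7.1f} rpm  {load:5.1f} %")
```

Unlike a regular grid, each new batch of Sobol points (best taken in powers of two) fills the gaps left by the previous ones, so a plan can be extended without losing coverage of the domain.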

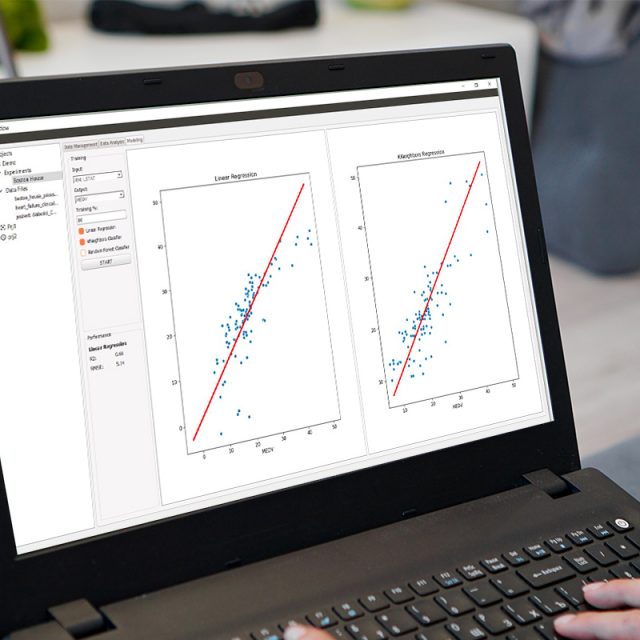

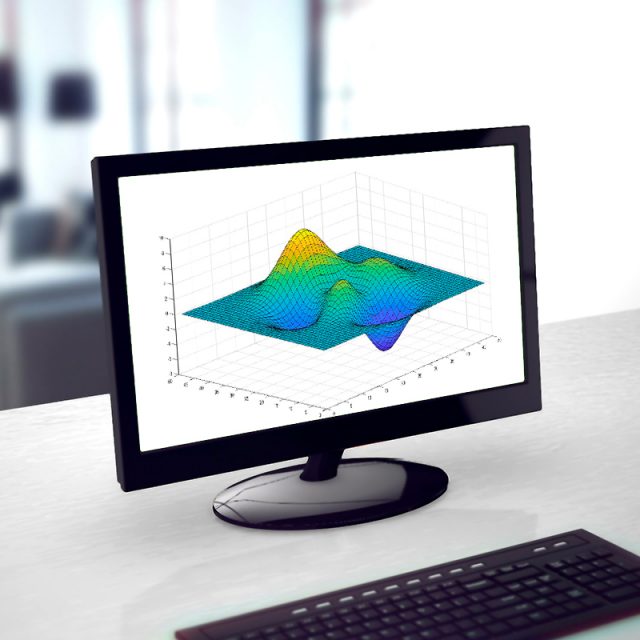

It is software that models the physical process, using Gaussian process regression techniques, and uses the measured data to:

• Better understand a physical phenomenon

• Zoom in on a dataset by creating virtual measurements
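A minimal sketch of Gaussian process regression in plain Python shows how “virtual measurements” can be produced: the posterior mean interpolates the measured data, and the posterior standard deviation indicates how far each virtual point can be trusted. The squared-exponential kernel, its fixed hyperparameters and the sine-shaped data are assumptions for illustration; practical tools usually fit the hyperparameters to the data, as scikit-learn's `GaussianProcessRegressor` does by maximising the log marginal likelihood.

```python
import math


def rbf(a, b, length_scale=1.0, variance=1.0):
    """Squared-exponential (RBF) covariance between two 1-D inputs."""
    return variance * math.exp(-0.5 * ((a - b) / length_scale) ** 2)


def solve(matrix, rhs):
    """Solve matrix @ x = rhs by Gaussian elimination with partial pivoting."""
    n = len(matrix)
    aug = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (aug[r][n] - sum(aug[r][c] * x[c] for c in range(r + 1, n))) / aug[r][r]
    return x


def gp_predict(x_train, y_train, x_new, length_scale=1.0, variance=1.0, noise=1e-6):
    """Posterior mean and standard deviation of a zero-mean GP at the points x_new."""
    K = [[rbf(a, b, length_scale, variance) + (noise if i == j else 0.0)
          for j, b in enumerate(x_train)] for i, a in enumerate(x_train)]
    alpha = solve(K, y_train)  # K^-1 y
    means, stds = [], []
    for x in x_new:
        k_star = [rbf(x, a, length_scale, variance) for a in x_train]
        means.append(sum(k * w for k, w in zip(k_star, alpha)))
        v = solve(K, k_star)  # K^-1 k*
        var = rbf(x, x, length_scale, variance) - sum(k * vi for k, vi in zip(k_star, v))
        stds.append(math.sqrt(max(var, 0.0)))  # clip tiny negative round-off
    return means, stds


# Hypothetical measurements of a 1-D phenomenon (here: samples of sin(x))
x_meas = [0.0, 1.0, 2.0, 3.0, 4.0]
y_meas = [math.sin(x) for x in x_meas]
# "Virtual measurements" between and beyond the real ones
x_virtual = [0.5, 1.5, 2.5, 3.5, 10.0]
means, stds = gp_predict(x_meas, y_meas, x_virtual)
for x, m, s in zip(x_virtual, means, stds):
    print(f"x={x:4.1f}  mean={m:+.3f}  std={s:.3f}")
```

Near the measurements the standard deviation stays small, while at x = 10, far from any data, the model falls back to its prior (mean 0, standard deviation 1): a useful warning that a virtual measurement there is not reliable.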

It is software for the mathematical optimisation of generic software models, based on genetic algorithms. Its main purpose is to allow the user to optimise the parameters of a selected Simulink model.
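The principle of genetic optimisation can be sketched in a few lines of Python: a population of candidate parameter sets evolves through selection, crossover and mutation, and the best candidates survive unchanged to the next generation (elitism). Here a hypothetical analytic cost function stands in for a simulation run, and the two “gains” `kp` and `ki`, their bounds and all GA settings are assumptions; in a real setup, each cost evaluation would run the selected model.

```python
import random


def simulated_cost(params):
    """Hypothetical stand-in for one simulation run (lower is better); optimum at kp=2.0, ki=0.5."""
    kp, ki = params
    return (kp - 2.0) ** 2 + (ki - 0.5) ** 2


def genetic_optimise(cost, bounds, pop_size=30, generations=60,
                     elite_fraction=0.2, mutation_rate=0.2, seed=1):
    """Minimise cost over the box `bounds` with a simple elitist genetic algorithm."""
    rng = random.Random(seed)
    population = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]
    history = []  # best cost at each generation
    n_elite = max(1, int(elite_fraction * pop_size))
    for _ in range(generations):
        population.sort(key=cost)
        history.append(cost(population[0]))
        # Elitism: the best individuals survive unchanged
        next_gen = [ind[:] for ind in population[:n_elite]]
        while len(next_gen) < pop_size:
            # Tournament selection: best of 3 random individuals, once per parent
            parent_a = min(rng.sample(population, 3), key=cost)
            parent_b = min(rng.sample(population, 3), key=cost)
            # Uniform crossover: each gene comes from one parent at random
            child = [a if rng.random() < 0.5 else b for a, b in zip(parent_a, parent_b)]
            # Gaussian mutation, clipped to the allowed range
            for i, (lo, hi) in enumerate(bounds):
                if rng.random() < mutation_rate:
                    child[i] = min(hi, max(lo, child[i] + rng.gauss(0.0, 0.1 * (hi - lo))))
            next_gen.append(child)
        population = next_gen
    population.sort(key=cost)
    history.append(cost(population[0]))
    return population[0], history


bounds = [(0.0, 5.0), (0.0, 2.0)]  # hypothetical allowed ranges for kp and ki
best, history = genetic_optimise(simulated_cost, bounds)
print(f"best kp={best[0]:.3f}, ki={best[1]:.3f}, cost={history[-1]:.2e}")
```

Genetic algorithms need only cost values, not gradients, which is why they suit black-box simulation models whose internals cannot be differentiated.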

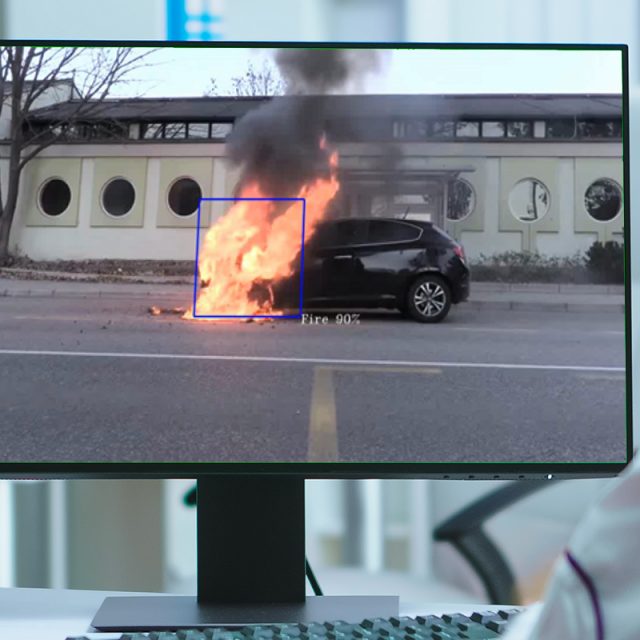

This project involves building integrated on-board software for interacting with vehicle data (CAN bus, etc.) and for displaying the events received through dedicated hardware. A service centre component was also developed to manage images using computer vision technologies.
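As an example of vehicle data interaction, the sketch below decodes physical values from the eight data bytes of a CAN frame. The frame, the signal names, positions, scaling factors and offsets are hypothetical (in practice they come from the vehicle's signal database, often a DBC file), and only byte-aligned, unsigned, little-endian (Intel) signals are handled; reading frames from a real bus would typically go through a library such as python-can.

```python
from dataclasses import dataclass


@dataclass
class Signal:
    """Hypothetical signal definition, similar to an entry in a DBC file."""
    name: str
    start_byte: int
    length: int  # in bytes
    factor: float
    offset: float
    unit: str


def decode(payload: bytes, signal: Signal) -> float:
    """Decode an unsigned, byte-aligned, little-endian (Intel) signal to a physical value."""
    raw = int.from_bytes(payload[signal.start_byte:signal.start_byte + signal.length], "little")
    return raw * signal.factor + signal.offset


# Hypothetical layout of a frame with arbitration ID 0x123
SIGNALS = [
    Signal("vehicle_speed", 0, 2, 0.01, 0.0, "km/h"),
    Signal("coolant_temp", 2, 1, 1.0, -40.0, "°C"),
]

payload = bytes.fromhex("A00F5A0000000000")  # 8 data bytes as received from the bus
for sig in SIGNALS:
    print(f"{sig.name} = {decode(payload, sig):.2f} {sig.unit}")
```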

Gitral is Teoresi software that recognises all the typical objects present in an instrument cluster, or more generally on an HMI, starting from images of the display taken with high-resolution cameras. Gitral is able to detect:

– Icon / TellTale Status and frequency

– Level and position of the bar graph

– Text and numbers

– Gauge position

For example, the tool is useful in the HIL (Hardware In the Loop) environment to automate the HMI software validation plan.
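As a hypothetical example of how such detections can feed an automated validation step, the sketch below estimates a telltale's blink frequency from a sequence of per-frame ON/OFF detections and checks it against a specification. The frame rate, the ±10 % tolerance and the simulated detections are assumptions; this is not Gitral's actual algorithm or interface.

```python
def blink_frequency(states, fps):
    """Estimate a telltale's blink frequency (Hz) from per-frame ON/OFF detections."""
    # Frames where the telltale switches from OFF to ON
    rising = [i for i in range(1, len(states)) if states[i] and not states[i - 1]]
    if len(rising) < 2:
        return 0.0  # fewer than two switch-on events: no period can be measured
    period_frames = (rising[-1] - rising[0]) / (len(rising) - 1)
    return fps / period_frames


def check_blink(states, fps, expected_hz, tolerance=0.1):
    """Hypothetical pass/fail criterion: measured frequency within ±10 % of the specification."""
    measured = blink_frequency(states, fps)
    return abs(measured - expected_hz) <= tolerance * expected_hz, measured


# Simulated detections at 30 frames per second: 10 frames ON, 10 frames OFF (1.5 Hz)
frames = ([True] * 10 + [False] * 10) * 5
passed, measured = check_blink(frames, fps=30, expected_hz=1.5)
print(passed, measured)  # True 1.5
```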

Finally, when required, other systems can communicate with Gitral through specific CAN messages or over a socket connection.